- How to Install Apache Spark for Python: How To
- How to Install Apache Spark for Python: Code
- How to Install Apache Spark for Python: Download

On decompressing the Spark download, you will see a directory structure that contains:

- all the configuration files needed to run any Spark application;
- scripts to launch a cluster on Amazon's cloud with multiple EC2 instances;
- the prebuilt libraries that make up the Spark APIs;
- important instructions for getting started with Spark;
- important startup scripts required to set up a distributed cluster;
- change information for each version of Apache Spark;
- examples, which are a good place to learn how to use Spark functions.
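To see which of these folders and files a particular download actually contains, a short Python sketch can list the top level of the unpacked directory. It assumes the location is given by a `SPARK_HOME` environment variable (a common convention, not something this tutorial sets up) and otherwise falls back to a hypothetical `~/spark` path; the helper name `describe_spark_home` is illustrative.

```python
import os
from pathlib import Path


def describe_spark_home(spark_home):
    """Return the sorted top-level entries of an unpacked Spark directory."""
    root = Path(spark_home).expanduser()
    if not root.is_dir():
        raise FileNotFoundError(f"not a directory: {root}")
    # Append "/" to directories so folders and plain files are easy to tell apart.
    return sorted(p.name + ("/" if p.is_dir() else "") for p in root.iterdir())


if __name__ == "__main__":
    # Assumption: SPARK_HOME points at the unpacked download; "~/spark" is a hypothetical fallback.
    home = os.environ.get("SPARK_HOME", "~/spark")
    for entry in describe_spark_home(home):
        print(entry)
```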

# How to Install Apache Spark for Python: Download

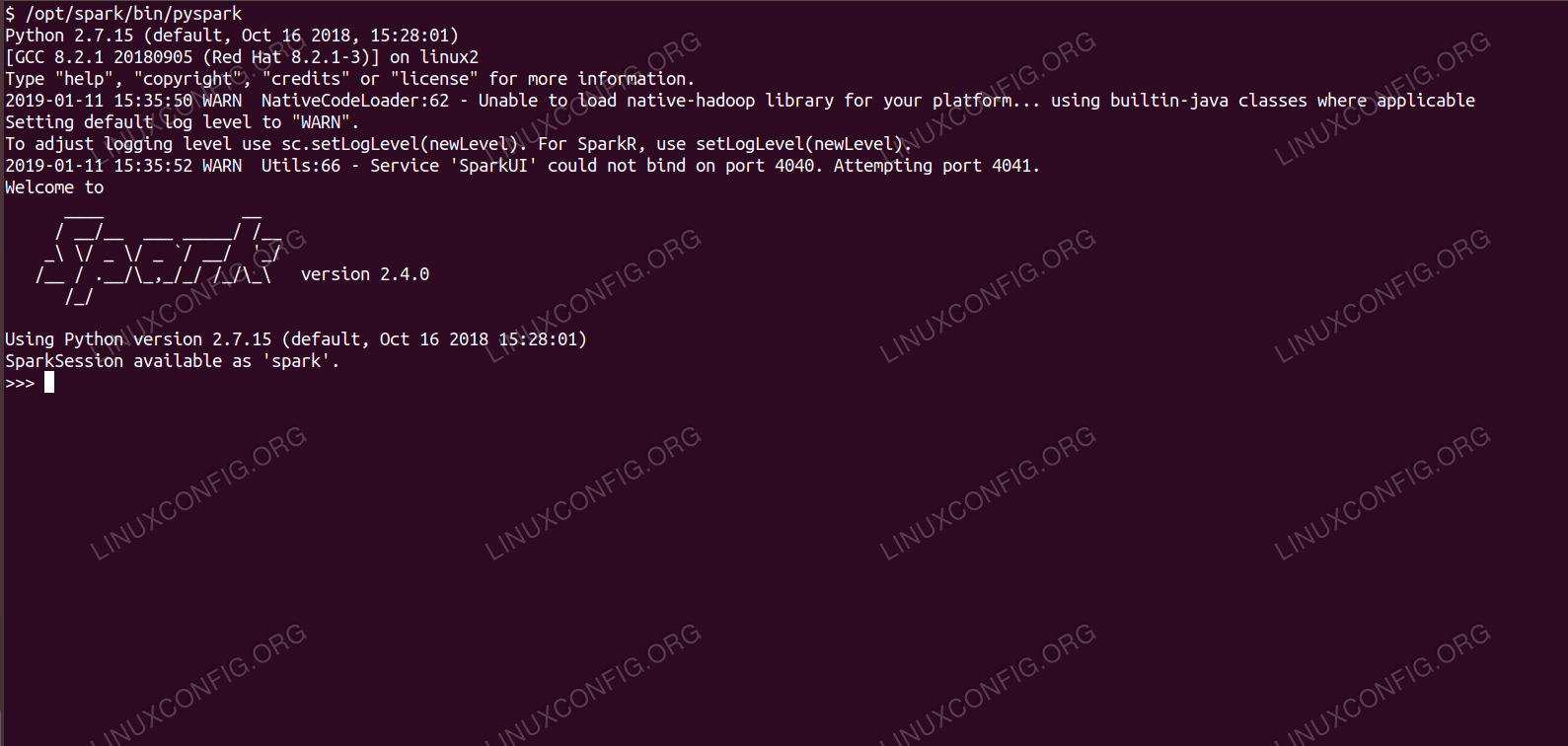

The open source community has developed a utility for big data processing with Spark and Python known as PySpark. PySpark lets data scientists work with Resilient Distributed Datasets (RDDs) in Apache Spark from Python. Py4J, a popular library integrated within PySpark, lets Python interface dynamically with JVM objects such as RDDs. Apache Spark comes with an interactive shell for Python, as it does for Scala, and this Python shell is also called “PySpark”.

To use PySpark you need Python installed on your machine. Most Linux distributions ship with Python preinstalled, so you may not need to install it separately. To get started in standalone mode, download the pre-built version of Spark from its official home page, listed in the prerequisites section of the PySpark tutorial.
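The download step can also be scripted. This is a sketch under stated assumptions rather than the tutorial's method: it assumes Apache's release archive at `archive.apache.org/dist/spark` and the `spark-<version>-bin-<hadoop build>.tgz` naming used by recent pre-built releases, and the version `3.5.1` is only an example, so confirm both on the official downloads page. `spark_download_url` and `download_and_extract` are hypothetical helpers.

```python
import tarfile
import urllib.request
from pathlib import Path

# Assumption: Apache's archive host; the official downloads page lists current releases and mirrors.
ARCHIVE_ROOT = "https://archive.apache.org/dist/spark"


def spark_download_url(version, hadoop_build="hadoop3"):
    """Build the URL of a pre-built Spark tarball (the naming pattern is an assumption)."""
    name = f"spark-{version}-bin-{hadoop_build}"
    return f"{ARCHIVE_ROOT}/spark-{version}/{name}.tgz"


def download_and_extract(url, dest_dir):
    """Download a .tgz archive into dest_dir and unpack it there; returns dest_dir."""
    dest = Path(dest_dir).expanduser()
    dest.mkdir(parents=True, exist_ok=True)
    archive = dest / url.rsplit("/", 1)[-1]
    urllib.request.urlretrieve(url, archive)
    with tarfile.open(archive, "r:gz") as tar:
        # Python versions with extraction filters get the safer "data" filter.
        if hasattr(tarfile, "data_filter"):
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)
    return dest


if __name__ == "__main__":
    # Hypothetical version and target directory; substitute a current release.
    url = spark_download_url("3.5.1")
    print("Downloading", url)
    download_and_extract(url, "~/spark")
```

After unpacking, the interactive PySpark shell can be started by running `bin/pyspark` from inside the extracted directory.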

# How to Install Apache Spark for Python: Code

Apache Spark is written in the Scala programming language, which compiles program code into bytecode for the Java Virtual Machine (JVM), so Spark's big data processing runs on the JVM.
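Because Spark runs on the JVM, PySpark needs a Java runtime on the machine as well as Python. A minimal check, assuming `java` is on the `PATH`, is to run `java -version` and read the major version from its output. Which Java versions are supported depends on the Spark release, so compare the result against that release's documentation; the helper names below are hypothetical.

```python
import re
import shutil
import subprocess


def parse_java_major(version_output):
    """Extract the major Java version from `java -version` output."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("unrecognised java -version output")
    major, minor = int(match.group(1)), match.group(2)
    # Java 8 and earlier report "1.x", so the real major version is the second number.
    if major == 1 and minor is not None:
        return int(minor)
    return major


def installed_java_major():
    """Return the major version of the `java` on PATH, or None if none is found."""
    java = shutil.which("java")
    if java is None:
        return None
    # `java -version` writes its report to stderr, not stdout.
    result = subprocess.run([java, "-version"], capture_output=True, text=True)
    return parse_java_major(result.stderr)


if __name__ == "__main__":
    print("Java major version:", installed_java_major())
```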

# How to Install Apache Spark for Python: How To

What am I going to learn from this PySpark tutorial for beginners? This Spark and Python tutorial will help you understand how to use the Python API bindings for Spark, known as PySpark.
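As a first taste of these bindings, here is a small word count over an RDD in local mode. It is a hypothetical illustration, not an example taken from this tutorial, and it assumes PySpark is importable (for instance after `pip install pyspark`) and a Java runtime is available. Spark is imported inside `word_count` so the pure `tokenize` helper can be used and tested without it.

```python
from operator import add
from typing import List, Tuple


def tokenize(line: str) -> List[str]:
    # Lower-case and split on whitespace; punctuation handling is deliberately naive.
    return line.lower().split()


def word_count(lines: List[str]) -> List[Tuple[str, int]]:
    """Count words with an RDD on a local Spark session (assumes PySpark is installed)."""
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[*]").appName("wordcount-sketch").getOrCreate()
    try:
        rdd = spark.sparkContext.parallelize(lines)
        # Split lines into words, pair each word with 1, then sum the counts per word.
        counts = rdd.flatMap(tokenize).map(lambda w: (w, 1)).reduceByKey(add)
        return sorted(counts.collect())
    finally:
        spark.stop()


if __name__ == "__main__":
    print(word_count(["to be or not to be", "that is the question"]))
```

The `local[*]` master runs Spark inside a single process using all available cores, which suits a first experiment before setting up a cluster.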
